Duplicate content refers to identical or very similar content that is available at more than one URL. It often appears unintentionally, for example through content management system settings, URL parameters, or content syndication.

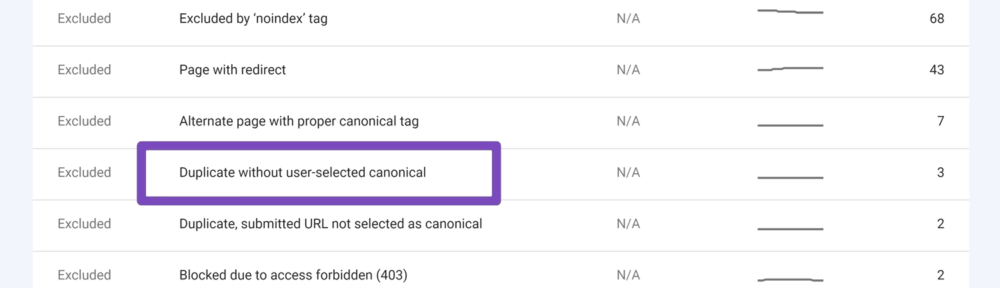

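When duplicate pages exist without a user-selected canonical (a preferred URL that you declare, usually with a rel="canonical" tag), search engines have to decide for themselves which version to index and rank. Google Search Console reports such pages under the status "Duplicate without user-selected canonical". The consequences can include ranking signals split across several URLs and less organic traffic to the page you actually want to rank. Outright penalties are rare: Google generally reserves them for duplication intended to manipulate rankings.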

Without a canonical you have specified, search engines choose which version to show in search results based on their own signals, and it may not be the one you prefer. That can send users to an outdated, parameter-laden, or otherwise less useful URL.
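To check whether a page already declares a user-selected canonical, you can look for a `<link rel="canonical">` element in its HTML. The following is a minimal Python sketch using the standard-library `html.parser`; the names `CanonicalFinder` and `declared_canonicals` are hypothetical. It assumes you already have the page's HTML as a string, and it does not check whether the tag sits inside `<head>` or look for a canonical sent in an HTTP `Link` header, both of which matter in practice.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> element it sees."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names, but not attribute values.
        if tag != "link":
            return
        attributes = {name: value or "" for name, value in attrs}
        # rel may hold several space-separated tokens, so split before testing.
        rel_tokens = attributes.get("rel", "").lower().split()
        href = attributes.get("href", "").strip()
        if "canonical" in rel_tokens and href:
            self.canonicals.append(href)


def declared_canonicals(html: str) -> list[str]:
    """Return the canonical URLs declared in the HTML (empty list if none)."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonicals


page = '<head><link rel="canonical" href="https://example.com/shoes"></head>'
print(declared_canonicals(page))  # ['https://example.com/shoes']
```

An empty result means the page has no user-selected canonical in its markup, so the search engine will pick one on its own.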

Identifying Duplicate Content

Manual Identification Technique

Manual identification means reviewing your website’s pages yourself to find instances of duplicate content, visually comparing content across different URLs to see whether it is the same or very similar. For example, you might open two pages side by side and read through them to check for identical paragraphs or sections. You can also search Google for an exact phrase from a page, in quotes, combined with the site: operator (for example, "an exact sentence from the page" site:example.com) to see whether it appears on several of your URLs.

Once you’ve identified potential duplicate content manually, you can make a list of the URLs or pages where it occurs. This process may be time-consuming, especially for larger websites with lots of content. However, it gives you a thorough understanding of where duplicate content exists on your site, allowing you to take appropriate action to address it.
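Part of the side-by-side reading can be scripted. This Python sketch assumes each page’s main text has already been extracted as plain text with blank lines between paragraphs; `shared_paragraphs` and its `min_words` threshold (which skips short boilerplate such as button labels) are hypothetical choices, not a standard.

```python
def shared_paragraphs(text_a: str, text_b: str, min_words: int = 8) -> list[str]:
    """Return paragraphs of text_a that also appear in text_b,
    ignoring differences in case and whitespace."""

    def normalize(paragraph: str) -> str:
        # Lowercase and collapse runs of whitespace so trivial differences don't matter.
        return " ".join(paragraph.lower().split())

    def paragraphs(text: str) -> dict[str, str]:
        # Map normalized paragraph -> original paragraph, skipping short blocks.
        result: dict[str, str] = {}
        for block in text.split("\n\n"):
            key = normalize(block)
            if len(key.split()) >= min_words:
                result.setdefault(key, block.strip())
        return result

    paras_a = paragraphs(text_a)
    paras_b = paragraphs(text_b)
    return [paras_a[key] for key in paras_a if key in paras_b]
```

Each returned paragraph is a candidate for your list of duplicate locations; you still need to judge whether the overlap is a real problem or legitimate shared text.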

Automated Tools for Detecting Duplicate Content

Alternatively, you can use automated tools designed to identify duplicate content. These tools scan pages and flag instances of duplication, saving time and effort compared to manual methods. Popular options include Siteliner and Screaming Frog, which crawl your own site and highlight duplicate or near-duplicate pages, and Copyscape, which checks whether your text also appears on other websites.

Using these tools streamlines detection and shows how much duplication exists across your website. Many of them produce detailed reports that make it easier to understand the scope of the issue and prioritize fixes, and some can re-crawl your site on a schedule to catch new duplicates as they appear, helping you keep the site clean over time.
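Exactly how each of these tools scores similarity is not covered here, so the sketch below uses one common, simple technique instead: split each page into overlapping five-word "shingles" and compare the sets with Jaccard similarity (shared shingles divided by all distinct shingles). The `pages` input (URL mapped to extracted text), the 0.8 threshold, and the function names are assumptions for illustration.

```python
from itertools import combinations


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Split text into the set of overlapping k-word sequences."""
    words = text.lower().split()
    if len(words) < k:
        # Very short texts become a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict[str, str], threshold: float = 0.8, k: int = 5):
    """Yield (url_a, url_b, similarity) for every page pair at or above threshold."""
    signatures = {url: shingles(text, k) for url, text in pages.items()}
    for url_a, url_b in combinations(sorted(signatures), 2):
        score = jaccard(signatures[url_a], signatures[url_b])
        if score >= threshold:
            yield url_a, url_b, round(score, 3)
```

Comparing every pair is quadratic in the number of pages, so on large sites this approach is usually replaced by an approximation of Jaccard similarity such as MinHash.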

Common Signs of Duplicate Content

Identifying duplicate content can also involve recognizing certain patterns or indicators that suggest duplication across your website. One common sign is finding duplicate meta descriptions or title tags across different pages, which can confuse search engines about which page to rank. Similarly, if you notice content that is very similar or identical across multiple pages with only minor variations, it could indicate duplicate content issues.
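If you export each page’s title and meta description (for instance from a site crawl), finding duplicates comes down to grouping URLs by value. In this Python sketch the input shape, a dict mapping each URL to its `"title"` and `"description"`, and the function name `duplicate_values` are assumptions; values are compared case-insensitively with whitespace collapsed.

```python
from collections import defaultdict


def duplicate_values(pages: dict[str, dict[str, str]], field: str) -> dict[str, list[str]]:
    """Group URLs that share the same value for `field` (e.g. "title")."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, meta in pages.items():
        value = " ".join(meta.get(field, "").split()).lower()
        if value:  # pages with a missing or empty value are skipped
            groups[value].append(url)
    # Keep only values shared by two or more URLs.
    return {value: sorted(urls) for value, urls in groups.items() if len(urls) > 1}
```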

Another red flag is when multiple URLs lead to the same or highly similar content. This can occur due to URL parameters, session IDs, or other factors that create multiple versions of the same page. Additionally, if your site republishes scraped or syndicated content without attribution or a canonical link to the original, address it: copying other sites’ content without adding value can lead to a manual action in serious cases, and it undermines the integrity of your site’s content.
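Exact copies served at several URLs, such as session-ID or parameter variants of one page, can be found cheaply by hashing each page’s normalized text and grouping URLs that share a hash. A minimal Python sketch, assuming you already have the extracted text for each crawled URL; the helper names are hypothetical. Pages that differ by even one word get different hashes, so near-duplicates need a similarity measure instead.

```python
import hashlib
from collections import defaultdict


def content_fingerprint(text: str) -> str:
    """SHA-256 of the page text with case and whitespace differences removed."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def urls_with_same_content(pages: dict[str, str]) -> list[list[str]]:
    """Return sorted groups of URLs whose normalized text is identical."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return sorted(sorted(urls) for urls in groups.values() if len(urls) > 1)
```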

Strategies for Fixing Duplicate Content

Consolidation Techniques

- Implement Redirects: Redirect duplicate URLs to one main URL using permanent redirects (known as 301 redirects), ensuring visitors and search engines are directed to the preferred version.

- Canonical Tags: Add a <link rel="canonical" href="..."> element to the <head> of each duplicate page, pointing to the preferred URL. Search engines treat it as a strong hint, rather than a strict directive, about which page should be considered the primary source of the content.
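Redirects are normally configured in the web server or CMS rather than written by hand, but the logic is simple. This Python sketch uses a hypothetical `REDIRECTS` map from duplicate paths to preferred URLs, returns the status line and `Location` header for a 301, and builds the canonical `<link>` element for duplicate pages that must stay reachable; the domain and paths are placeholders.

```python
from html import escape

# Hypothetical mapping from duplicate paths to the preferred (canonical) URL.
REDIRECTS = {
    "/index.html": "https://www.example.com/",
    "/shoes/": "https://www.example.com/shoes",
}


def redirect_response(path: str):
    """Return (status, headers) for a permanent redirect,
    or None if the path is not a known duplicate."""
    target = REDIRECTS.get(path)
    if target is None:
        return None
    return "301 Moved Permanently", [("Location", target)]


def canonical_tag(url: str) -> str:
    """Build the <link> element to place in the <head> of a duplicate page."""
    # Escape &, <, > and quotes so the URL is safe inside the attribute.
    return f'<link rel="canonical" href="{escape(url, quote=True)}">'
```

The (status, headers) pair is shaped so it could be passed to a WSGI `start_response` call.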

Content Rewriting and Differentiation

- Rewrite Content: Rewrite duplicated text or articles to present the information uniquely, providing fresh perspectives or additional details.

- Differentiate Pages: Add distinctive elements to duplicated pages, such as additional information, images, or examples, making each page stand out and offer something unique to visitors.

URL Parameters and Parameter Handling

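- Handle URL Parameters on Your Site: Google Search Console’s URL Parameters tool, which once let site owners tell Google how to treat parameters, was retired in 2022, so parameters now have to be managed on the site itself. Point parameter variants to the clean URL with canonical tags, link internally only to the clean version, and avoid adding session IDs or tracking parameters to internal links. This helps prevent search engines from treating URL variations as separate pages, reducing the chances of duplicate content issues.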
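One way to keep parameter variants from multiplying is to compute a single normalized URL and use it in canonical tags and internal links. In this Python sketch, `IGNORED_PARAMS` is a hypothetical list of parameters assumed never to change page content on your site; the function also sorts the remaining parameters, which is only safe if their order doesn’t affect the page.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters assumed never to change page content on this site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "ref"}


def canonicalize_url(url: str) -> str:
    """Drop ignored parameters, sort the rest, and lowercase scheme and host."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit((
        parts.scheme.lower(),
        parts.netloc.lower(),
        parts.path or "/",
        urlencode(kept),
        "",  # fragments are never sent to the server, so drop them
    ))


print(canonicalize_url("HTTPS://Example.com/shoes?utm_source=news&size=9&color=red#reviews"))
# https://example.com/shoes?color=red&size=9
```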

Pagination and Sorting Solutions

- Implement Pagination Controls: The rel="next" and rel="prev" link tags describe the relationship between pages in a series, such as paginated listings. Google has said it no longer uses them for indexing, although other search engines may still read them. For Google, give each page in the series a self-referencing canonical rather than pointing every page at page 1, since each page lists different items, and make sure the pages link to each other with ordinary crawlable links.

- Implement Sorting Controls: Use canonical tags pointing to the default (unsorted) URL, or noindex directives, to address duplicate content created by sorting options on your website. By specifying the preferred version or instructing search engines not to index the sorted variations, you keep control over which content appears in search results.
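As an illustration, here is a Python sketch of the head tags a listing template might emit. It assumes listings live at a base URL with a hypothetical `?page=N` parameter and that sorted views reuse the same items. Each page in the series gets a self-referencing canonical, sorted views point to the unsorted URL of the same page, and `rel="prev"`/`rel="next"` are added only as optional hints.

```python
from typing import Optional
from urllib.parse import urlencode


def page_url(base_url: str, n: int) -> str:
    """URL of page n in the series; page 1 is the bare listing URL (assumed scheme)."""
    return base_url if n == 1 else f"{base_url}?{urlencode({'page': n})}"


def listing_head_tags(base_url: str, page: int, last_page: int,
                      sort: Optional[str] = None) -> list[str]:
    """Head tags for one page of a paginated, optionally sorted, listing."""
    # Unsorted page N canonicalizes to itself; a sorted view of page N
    # points to the unsorted page N instead of declaring itself canonical.
    tags = [f'<link rel="canonical" href="{page_url(base_url, page)}">']
    if sort is None:
        # Optional hints: Google ignores prev/next, other engines may read them.
        if page > 1:
            tags.append(f'<link rel="prev" href="{page_url(base_url, page - 1)}">')
        if page < last_page:
            tags.append(f'<link rel="next" href="{page_url(base_url, page + 1)}">')
    return tags
```

Using a canonical rather than noindex for sorted views avoids sending search engines two different signals for the same URL.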

Risks and Consequences

Leaving duplicate content unaddressed carries the risks below, which is why fixing it matters for a strong online presence.

SEO Implications

- Decreased Search Engine Rankings: Search engines like Google prioritize unique and original content. When the same content exists at multiple URLs, they usually show one version and filter out the rest; if they pick a version you didn’t intend, or ranking signals are split across the copies, the content can rank lower than a single consolidated page would.

- Dilution of Authority: Multiple versions of the same content can dilute the authority signals that search engines attribute to individual pages. As a result, the overall ranking potential of the content may be diminished.

- Negative Impact on Crawl Budget: Search engines allocate finite resources to crawling each site. Duplicate URLs consume part of that budget, so on large sites in particular, new or updated pages may be discovered and indexed more slowly.
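To get a rough sense of how much crawling goes to duplicates, you can count requests in your server logs (for example, those from Googlebot) that hit non-canonical URL variants. This Python sketch assumes the requested URLs have already been extracted from the logs and, as a simplification, that query strings never change content on the site; `duplicate_crawl_share` is a hypothetical helper.

```python
from urllib.parse import urlsplit, urlunsplit


def strip_query(url: str) -> str:
    """Canonical form under the simplifying assumption that
    query strings never change page content on this site."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def duplicate_crawl_share(crawled_urls: list[str]) -> float:
    """Fraction of crawl requests spent on URLs that are not in canonical form."""
    if not crawled_urls:
        return 0.0
    wasted = sum(1 for url in crawled_urls if url != strip_query(url))
    return wasted / len(crawled_urls)
```

A high share suggests that consolidating parameter variants would free up crawling for pages that matter.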

User Experience Issues

- Confusion and Frustration: Users who find the same content at different URLs may be unsure which page to visit for the information they want, which leads to frustration and a poor user experience.

- Reduced Credibility: Websites with duplicate content may appear less credible to users, as the presence of duplicate information may suggest a lack of attention to detail or quality control.

Potential Penalties from Search Engines

- Manual Actions: When duplication is deliberate and manipulative, for example scraped or copied content that adds no value, Google may issue a manual action, which can demote pages or remove them from search results. Ordinary technical duplication, such as parameter variants, does not normally trigger one.

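- Deindexing: In the most serious cases of manipulative duplication, search engines may remove pages or entire websites from their index, making them invisible to users searching on the web. Technical duplicates without a user-selected canonical are usually just left out of the index in favor of the version the search engine chose as canonical.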

Conclusion

Fixing duplicate content without user-selected canonicals is crucial for a strong online presence. The strategies discussed here offer practical ways to tackle this issue effectively.

By using techniques like redirects and canonical tags, website owners can guide search engines to the preferred version of content, preventing confusion and consolidating rankings. Rewriting and differentiating content ensures that each page offers something unique, improving both search engine performance and user experience.